Pipenv is a tool that aims to bring the best of all packaging worlds (bundler, composer, npm, cargo, yarn, etc.) to the Python world. Windows is a first-class citizen, in our world. It automatically creates and manages a virtualenv for your projects, as well as adds/removes packages from your Pipfile as you install/uninstall packages. It also generates the ever-important Pipfile.lock, which is used to produce deterministic builds. Pipenv is primarily meant to provide users and developers of applications with an easy method to set up a working environment.

*I am looking for a deployment workflow using pipenv for Python software to be run as a service. On Windows I intend to use NSSM to run the service, on Linux systemd. Ideally, I'd like this to be platform-independent, so I can use a similar workflow on Windows and Linux servers.* I'm happy(ish) with these tools; it's pipenv that is confusing me.

I've experimented with this a bit more and realized that even when pipenv is installed at a system level, the "virtualenvs" are created at a user level. However, I see this can be changed with an environment variable to put the "virtualenv" inside the project. Further, I have found that on Windows I can't get `pipenv run` to work from a service, as it doesn't seem to activate the virtualenv. Instead, I had to create a batch file that activates the virtualenv and executes main.py, then point NSSM at that. Is there something wrong with my approach? Is there a 'best practice' approach to using pipenv for deployment?
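One possible way around the activation problem with `pipenv run` under a service: a virtualenv does not strictly need to be activated, because invoking its own interpreter by full path already makes Python use that environment's packages. The sketch below is a hypothetical launcher built on that idea. It assumes the virtualenv lives in `<project>/.venv` (the layout Pipenv uses when the in-project environment variable is set) and that the entry point is `main.py`; the function names are made up for illustration.

```python
"""Hypothetical launcher: run main.py with the project's in-project virtualenv.

Assumes the virtualenv lives in <project>/.venv and the entry point is main.py.
"""
from __future__ import annotations

import subprocess
import sys
from pathlib import PurePosixPath, PureWindowsPath


def venv_python(project_dir: str, windows: bool) -> str:
    """Path of the virtualenv's interpreter for the given platform layout."""
    if windows:
        # Windows virtualenvs keep executables under Scripts\
        return str(PureWindowsPath(project_dir, ".venv", "Scripts", "python.exe"))
    # POSIX virtualenvs keep executables under bin/
    return str(PurePosixPath(project_dir, ".venv", "bin", "python"))


def build_command(project_dir: str, windows: bool) -> list[str]:
    """Command line that runs main.py directly with the venv interpreter.

    No 'activate' step: calling the venv's own python is what selects the venv.
    """
    if windows:
        script = str(PureWindowsPath(project_dir, "main.py"))
    else:
        script = str(PurePosixPath(project_dir, "main.py"))
    return [venv_python(project_dir, windows), script]


def run(project_dir: str) -> int:
    """Run the application and return its exit code (useful for NSSM/systemd)."""
    cmd = build_command(project_dir, sys.platform == "win32")
    return subprocess.call(cmd, cwd=project_dir)
```

Under these assumptions, NSSM could be pointed at `.venv\Scripts\python.exe` with `main.py` as its argument, and a systemd unit's `ExecStart=` at `.venv/bin/python main.py`, so neither platform needs pipenv or a batch file at run time. One caveat: activation also puts the venv's `Scripts`/`bin` folder on `PATH`, so an application that shells out to console scripts installed in the venv would need that handled separately.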

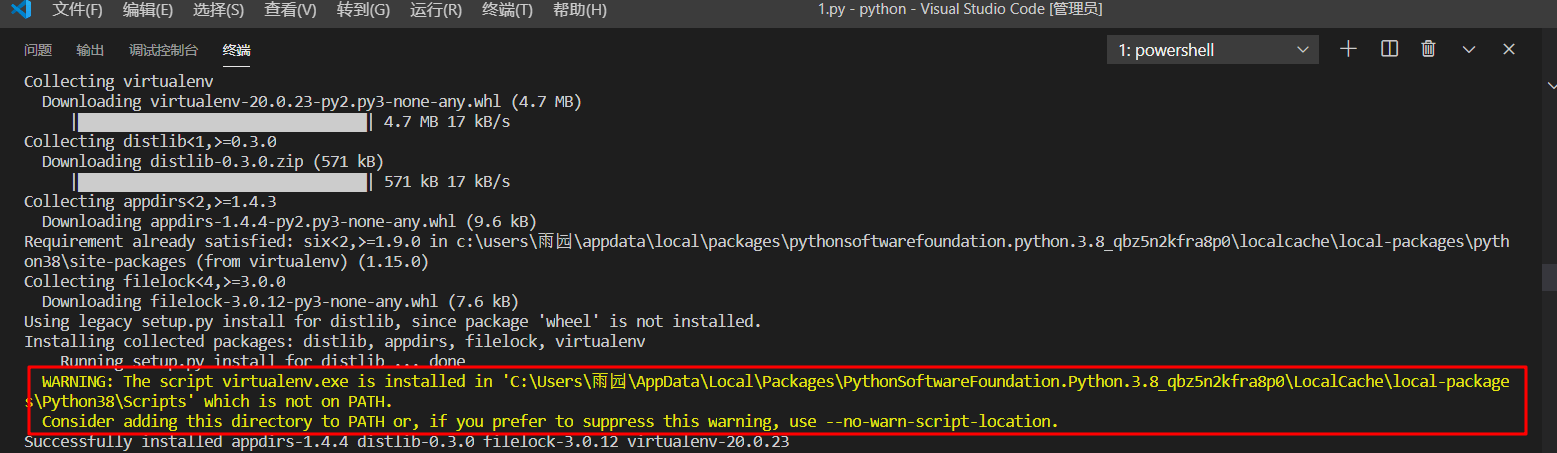

I am trying to use pipenv to distribute a Python application to a server (in this case, it's Windows, but I have similar uncertainty with Linux). My aim is that the Python application will normally be run as a service (using NSSM on Windows) but, failing that, can be run by any user without them having to install pipenv. However, the official docs advise a user install to 'avoid breaking system packages', either with `pip install --user pipenv` or via pipx. In both cases, the result is that the pipenv binary ends up in a user folder, which has to be added to the PATH unless you want to reference it using the full path. This all seems very odd, but perhaps I've missed something. It feels wrong to have a service pointing at a binary in my personal folders; similarly, other users would have to install their own pipenv or add my folders to their paths.

With the virtualenv placed inside the project, I can then use `pipenv install` and `pipenv run` as normal. This has the advantage (I think) that any user, or indeed a service user, can run these commands.

Cross-posted from Stack Overflow, as it was possibly off-topic there.
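The in-project setup can be scripted as a one-off deployment step. This is a hedged sketch: it sets `PIPENV_VENV_IN_PROJECT=1` so Pipenv creates `<project>/.venv` instead of a per-user virtualenv, and runs `pipenv install --deploy`, which aborts if `Pipfile.lock` is out of date. The helper names are hypothetical, and whoever runs this step still needs pipenv on their `PATH`; after that, the service only needs the interpreter inside `.venv`.

```python
"""Hypothetical deploy helper: install locked dependencies into <project>/.venv."""
from __future__ import annotations

import os
import subprocess
from typing import Mapping


def deploy_env(base: Mapping[str, str]) -> dict[str, str]:
    """Copy of the given environment with Pipenv's in-project venv switch set.

    The input mapping is not modified.
    """
    env = dict(base)
    env["PIPENV_VENV_IN_PROJECT"] = "1"
    return env


def deploy_command() -> list[str]:
    """'--deploy' makes pipenv abort if Pipfile.lock is out of date."""
    return ["pipenv", "install", "--deploy"]


def deploy(project_dir: str) -> None:
    """Run the install in project_dir; raises CalledProcessError on failure."""
    subprocess.run(
        deploy_command(),
        cwd=project_dir,
        env=deploy_env(os.environ),
        check=True,
    )
```

Keeping the environment construction separate from the `subprocess.run` call makes the switch easy to inspect, and passing `env=` scopes the variable to this one pipenv invocation rather than changing it machine-wide.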
